September 29, 2020

Speeding Up The Website

In my last post way back yesterday, I lamented how the site was getting bloated and slow. So I decided to do something about it. This is another post that is going to get all computerish, so you might want to hit that Random link in the sidebar and get out of here.

Much of what is on your screen is generated on the fly, for example the menu structures in the sidebar. These are rather computationally expensive to create, and often don't change from one use to the next, so they are a good candidate for caching. A few of the menu options are site-wide, but most of the menus are specific to each page, and some are random. The site-wide items are easy to handle, and you don't want to cache the random items, or they would stop being random!

That leaves the page-specific menus, and it turns out that WordPress has a nice built-in way to store things like that. I wrote a few very small functions to use that built-in storage, and to clear the caches when necessary. Then I bookended each of the menu functions with one line of code at the beginning to check the cache, and one line at the end to write it. If the 'checker' gets a result, it returns that result and skips the rest of the function. Otherwise the function executes, and just before the end writes its result to the cache for next time.

It's actually really elegant, as programmers like to say. I didn't have to rewrite everything; in fact, I barely modified the code: just one new line at the start and one new line at the end of any function whose output I want to cache. It may not be the absolute most efficient way of doing it, but it was easy and foolproof, and there's a lot to be said for that. And I can turn it off any time I want, and the site will revert to non-caching.

Then I had to decide when and where to invalidate the cache. I thought about it, and there is no telling where a change might ripple through to, so the simplest thing is to flush the whole cache on any change. Any time I save, delete, or undelete a post, the whole cache is emptied and starts over. That may seem inefficient, but so what? It is simple and foolproof. The final part of the puzzle was adding some controls to turn the caching on and off, and to empty the cache manually if necessary.
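Here is a rough sketch of "flush everything on any change" plus the on/off and manual-empty controls. It is hypothetical Python, not the site's code: the event names `post_saved`, `post_deleted`, and `post_restored` are made-up stand-ins for whatever WordPress hooks fire on save, delete, and undelete.

```python
# Sketch of whole-cache invalidation with on/off and manual-flush controls.
# Assumption: event names are hypothetical stand-ins for WordPress hooks.
from typing import Callable, Dict


class PageCache:
    def __init__(self) -> None:
        self.enabled = True
        self._store: Dict[str, str] = {}

    def get_or_build(self, key: str, build: Callable[[], str]) -> str:
        """Return the cached value for key, building and storing it on a miss."""
        if self.enabled and key in self._store:
            return self._store[key]
        value = build()
        if self.enabled:
            self._store[key] = value
        return value

    def flush(self) -> None:
        """Empty the whole cache; doubles as the manual 'empty cache' control."""
        self._store.clear()

    def set_enabled(self, on: bool) -> None:
        """Turn caching on or off; turning it off also empties it."""
        self.enabled = on
        if not on:
            self.flush()

    def __len__(self) -> int:
        return len(self._store)


# Any content change flushes everything, instead of tracking what it affects.
FLUSH_EVENTS = {"post_saved", "post_deleted", "post_restored"}


def on_content_event(cache: PageCache, event: str) -> None:
    if event in FLUSH_EVENTS:
        cache.flush()
```

The design choice mirrors the post: no dependency tracking at all, so a change can never leave a stale menu behind, at the cost of rebuilding entries that may not have needed it.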

The worst thing a caching scheme could do is increase trips to the database. Those are very expensive. In some cases, caching replaces many trips to the database with just one. For pages that are seldom hit, caching won't help much; it may even hurt, because the cache may get flushed before it is ever used. But for something like the Home page, the cache will get hit over and over. Across the whole site, it is a win.
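The trade-off can be made concrete with a rough model. These are assumptions, not measurements from this site: the cache itself lives in the database, so each cache read or write costs one query; building a menu uncached costs $N$ queries; and a fraction $h$ of requests find a valid cache entry. Queries per request are then:

```latex
\begin{aligned}
\text{without cache:}\quad & Q_0 = N \\
\text{with cache:}\quad & Q_c = h \cdot 1 + (1-h)(1 + N + 1) = N + 2 - h(N+1) \\
\text{cache wins:}\quad & Q_c < Q_0 \iff h > \frac{2}{N+1}
\end{aligned}
```

With $N = 10$, for example, caching pays off once more than about 18% of requests are hits ($2/11 \approx 0.18$). A rarely visited page whose cache is flushed before its second visit has $h = 0$ and costs two extra queries per build, which is the "it may even hurt" case.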

Informal testing shows that the caching is working. Page generation times are roughly halved once the cache has been written, compared to a first access. That's not an entirely fair comparison, since the first access now also carries the overhead of writing the cache. But I think I am seeing some major improvements, in some cases an order of magnitude. Almost all second page loads are now under 0.2 seconds of server time. How long it takes to get down the wire to you is another story.
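One simple way to make a first-access versus second-access comparison is to time the same call twice. This is a hypothetical Python sketch, not how the site was measured: `time.sleep` stands in for the expensive generation, and `render_menu` is an illustrative name.

```python
# Rough cold-vs-warm timing of a cached function.
# Assumption: time.sleep stands in for the expensive page/menu generation.
import time
from typing import Callable, Dict

_store: Dict[str, str] = {}


def render_menu(page_id: int) -> str:
    key = f"menu:{page_id}"
    if key in _store:
        return _store[key]
    time.sleep(0.05)                 # pretend this is the expensive build
    html = f"<nav>menu for page {page_id}</nav>"
    _store[key] = html               # first access also pays for the write
    return html


def timed(fn: Callable[[int], str], arg: int) -> float:
    """Wall-clock seconds for one call, using a high-resolution timer."""
    start = time.perf_counter()
    fn(arg)
    return time.perf_counter() - start


cold = timed(render_menu, 42)   # builds and writes the cache
warm = timed(render_menu, 42)   # served from the cache
print(f"cold {cold * 1000:.1f} ms, warm {warm * 1000:.3f} ms")
```

As the post notes, the cold number includes the cache-write overhead, so the ratio slightly flatters the cache.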

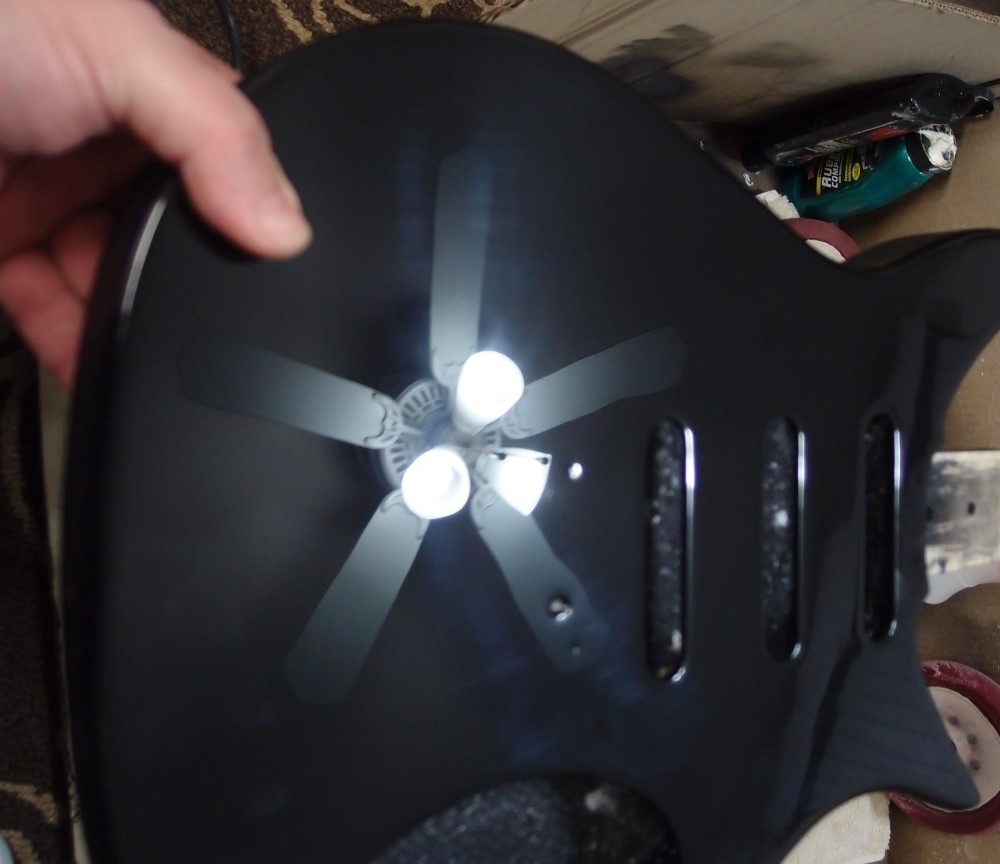

The image at the top of this post has nothing to do with anything, I just like it - it is colorful.

Why not just use a caching plugin, like WP-SuperCache? Nowadays, those plugins are fairly trouble-free, and for most people that would probably work. But as I've said before, I don't trust plugins. Thousands of lines of somebody else's junk. (You should see the awful examples in the WordPress Codex!) Also, a plugin like that caches a snapshot of the entire page, which means any parts you would like to stay active won't be. Anything random will cease to be random, and the counters will freeze.

Even worse would be the web host caching pages at the server level. You have no control over that whatsoever. I would get very angry and cancel my account if they started doing that. They had already snuck a caching plugin into WordPress by default, which I found and got rid of.

And when I click that Update button, all the caches in the site will be flushed again. I think I have come up with a pretty good system.

Update:

After letting the new caching system run for a day, I saw that it was creating a lot of caches that were not really useful. That was a side effect of making only minimal changes to the code. The system was running very well but could be better, so I decided to go beyond minimal changes. Now it caches only the end results (the main navigation structures) and not all the intermediate results that go into building the final product. Each page now has just two or three cache entries instead of a dozen. I added some tracking in the dashboard to see if I am blowing up the database with cache entries.
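A sketch of the revised approach: only the top-level navigation builders write to the cache, the intermediate helpers are left uncached, and a small counter reports entries per page, in the spirit of the dashboard tracking. This is hypothetical Python; the key format `"<kind>:<page_id>"` and all function names are assumptions.

```python
# Cache only final navigation structures; count entries per page.
# Assumptions: key format "<kind>:<page_id>" and all names are hypothetical.
from collections import Counter
from typing import Dict, List

store: Dict[str, str] = {}


def _related_links(page_id: int) -> List[str]:
    # Intermediate result: deliberately not cached any more.
    return [f"/page/{page_id + i}" for i in (1, 2)]


def _tag_links(page_id: int) -> List[str]:
    # Another intermediate result, also uncached.
    return [f"/tag/t{page_id % 3}"]


def main_nav(page_id: int) -> str:
    # Final product: the only thing cached for this structure.
    key = f"nav:{page_id}"
    if key not in store:
        store[key] = " | ".join(_related_links(page_id) + _tag_links(page_id))
    return store[key]


def sidebar(page_id: int) -> str:
    key = f"sidebar:{page_id}"
    if key not in store:
        store[key] = f"<aside>{len(_related_links(page_id))} related</aside>"
    return store[key]


def entries_per_page() -> Counter:
    """Count cache entries per page id, to spot the cache growing unexpectedly."""
    return Counter(key.split(":", 1)[1] for key in store)
```

The helpers still run on a miss, but they no longer leave their own entries behind, so each page contributes only as many entries as it has top-level structures.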

Another factor in page load times is PHP compilation. The server 'compiles' each PHP script before running it, and caches the compiled version for a time. There is no way for me to know the specifics of this.

While I was at it, I looked at a lot of options I had set up and never used, and disabled them. So the theme is more streamlined and theoretically faster. It's time to bump the version number to Simple 2.0.

Update:

I did some more-scientific testing, and with all my optimizations, the website runs 2-4 times faster, measured in server CPU time. So ...

and

The cache flushed itself automatically for the first time Sunday morning just after midnight. By the time I got up, it was already 40% rebuilt. At this writing 12 hours later, it is now 61% rebuilt. I didn't expect that to happen so fast. I wonder how long it will take to get to 90%? I don't think it will get higher than that before it flushes again next Sunday.
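A weekly flush and a "percent rebuilt" figure could be computed along these lines. This is a hypothetical Python sketch: the post doesn't say how the percentage is measured, so here it is assumed to be cached pages divided by total pages, and the function names are illustrative.

```python
# Weekly flush just after Sunday midnight, plus a "percent rebuilt" gauge.
# Assumptions: "rebuilt" = cached pages / total pages; names are hypothetical.
from datetime import datetime, timedelta
from typing import Set


def last_sunday_midnight(now: datetime) -> datetime:
    """Most recent Sunday 00:00 at or before `now` (Monday=0 ... Sunday=6)."""
    days_since_sunday = (now.weekday() - 6) % 7
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    return midnight - timedelta(days=days_since_sunday)


def flush_due(last_flush: datetime, now: datetime) -> bool:
    """True if a Sunday-midnight boundary has passed since the last flush."""
    return last_flush < last_sunday_midnight(now)


def percent_rebuilt(cached_pages: Set[int], total_pages: int) -> float:
    """Share of pages that currently have a cache entry, as a percentage."""
    return 100.0 * len(cached_pages) / total_pages if total_pages else 0.0
```

Because entries are only rebuilt when someone (or something) requests a page, the percentage climbs with traffic rather than on a fixed schedule.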

With a few exceptions, a human can't really tell the difference between a cached page and an un-cached one; our sense of time is not that fine. But it makes a huge difference to a computer! And I guess the old engineer in me was just bothered by the inefficiencies I knew were there. WordPress is a giant pig already, and I have it doing a lot of extra work. It doesn't help to make it worse.

Caching the slideshows also makes a measurable difference, especially the big ones. I'm now satisfied with the performance, and have temporarily run out of new ideas to program. So I will add some more guitars.

Update:

Two days later, the cache is 90% rebuilt. I had to think about that: all the random bots that I am ignoring (51% of the total traffic) are rebuilding it for me. I guess they're good for something after all.